Mirror of https://gitlab.com/manzerbredes/paper-lowrate-iot.git (synced 2025-06-06 14:47:39 +00:00)


Run simulations

To run all the simulations, execute the following call:

Experiments
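Each experiment below sets simKey and nests seq loops that call run once per parameter combination. As a rough guide to the workload, here is a sketch that recomputes how many run calls each sweep makes from the same seq bounds. It is an illustration, not part of the original scripts, and the helper count_values is hypothetical:

```bash
#!/usr/bin/env bash
# Recompute the number of run calls per experiment from the loop bounds
# used in this section: nested sweeps multiply, a single sweep does not.

# count_values ARGS...: how many values `seq ARGS...` produces
count_values() {
    seq "$@" | wc -l | tr -d ' '
}

sensorsPosRuns=$(( $(count_values 5 5 20) * $(count_values 1 10) ))
sendIntervalRuns=$(( $(count_values 5 5 20) * $(count_values 10 10 100) ))
bwRuns=$(( $(count_values 1 10) * $(count_values 10 20 100) ))
latencyRuns=$(( $(count_values 1 10) * $(count_values 1 1 10) ))
nbSensorsRuns=$(count_values 1 10)   # single sweep, no inner loop
nbHopRuns=$(( $(count_values 1 10) * $(count_values 1 10) ))

echo "SENSORSPOS=$sensorsPosRuns SENDINTERVAL=$sendIntervalRuns BW=$bwRuns"
echo "LATENCY=$latencyRuns NBSENSORS=$nbSensorsRuns NBHOP=$nbHopRuns"
```

With these bounds it prints `SENDINTERVAL=40`, `SENSORSPOS=40`, `BW=50`, `LATENCY=100`, `NBSENSORS=10` and `NBHOP=100`. Note that `seq 10 20 100` yields only 5 values (10, 30, 50, 70, 90). For a local run, run also skips any parameter set whose log file already exists, so a rerun may launch fewer simulations.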

Sensors Position

<<singleRun>>

simKey="SENSORSPOS"
for sensorsNumber in $(seq 5 5 20)
do
    for positionSeed in $(seq 1 10)
    do
        run
    done
done

Sensors Send Interval

<<singleRun>>

simKey="SENDINTERVAL"
for sensorsNumber in $(seq 5 5 20)
do
    for sensorsSendInterval in $(seq 10 10 100)
    do
        run
    done
done

Bandwidth

<<singleRun>>

simKey="BW"
for sensorsNumber in $(seq 1 10)
do
    for linksBandwidth in $(seq 10 20 100)
    do
        run
    done
done

Latency

<<singleRun>>

simKey="LATENCY"
for sensorsNumber in $(seq 1 10)
do
    for linksLatency in $(seq 1 1 10)
    do
        run
    done
done

Number of sensors

<<singleRun>>

simKey="NBSENSORS"
for sensorsNumber in $(seq 1 10)
do
    run
done

Number of hops

<<singleRun>>

simKey="NBHOP"
for sensorsNumber in $(seq 1 10)
do
    for nbHop in $(seq 1 10)
    do
        run
    done
done

Single Run
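The run function in this section splits the energy metrics by the sign of nodeId. Negative IDs are WiFi sensors and count toward sensorsEnergy. Node 0 (the AP) and positive IDs (ECOFEN nodes) count toward networkEnergy. totalEnergy sums every row. Here is a minimal sketch of the same split in a single awk pass. It assumes the energy CSV has one header line followed by nodeId,energy rows. The function name split_energy and the sample values are made up for illustration:

```bash
#!/usr/bin/env bash
# Sketch of the energy split done in run (not the original code):
# input is a header line, then "nodeId,energy" rows.
split_energy() {
    awk -F',' '
        # Skip the header line, then sum energy per category
        NR > 1 {
            total += $2
            # Negative nodeId: WiFi sensor. 0 or positive: AP or network node
            if ($1 < 0) { sensors += $2 } else { network += $2 }
        }
        # "+ 0" forces a numeric 0 when a category has no rows
        END { print total + 0, sensors + 0, network + 0 }
    '
}

# Made-up sample values, for illustration only
printf 'nodeId,energy\n-2,1.5\n-1,1.5\n0,3\n1,2\n' | split_energy   # prints: 8 3 5
```

Summing in one pass gives the same numbers as the three separate awk calls in run, given a non-numeric header line. It also makes explicit that totalEnergy equals sensorsEnergy plus networkEnergy.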

simulator="simulator/simulator"
parseEnergyScript="./parseEnergy.awk"
parseDelayScript="./parseDelay.awk"
logFolder="logs/"
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:${NS3_PATH}/build/lib

# Default parameters
sensorsSendInterval=10 # Do not go below 1 second: the simulator hangs
sensorsPktSize=5 # 1-byte temperature (-128 to +127 °C) and 4-byte sensor ID
sensorsNumber=10
nbHop=10 # See paper AC/Yunbo
linksBandwidth=10
linksLatency=2
positionSeed=5
simKey="NOKEY"

run () {
    # If a handleSim function is defined (typically on g5k), delegate the simulation to it
    type -t handleSim > /dev/null && { handleSim; return; }

    local logFile="${logFolder}/${simKey}_${sensorsSendInterval}SSI_${sensorsPktSize}SPS_${sensorsNumber}SN_${nbHop}NH_${linksBandwidth}LB_${linksLatency}LL_${positionSeed}PS.org"
    [ -f "$logFile" ] && return # Skip parameter sets that already have a log file

    local simCMD="$simulator --sensorsSendInterval=${sensorsSendInterval} --sensorsPktSize=${sensorsPktSize} --sensorsNumber=${sensorsNumber} --nbHop=${nbHop} --linksBandwidth=${linksBandwidth} --linksLatency=${linksLatency} --positionSeed=${positionSeed} 2>&1"
    local log=$(bash -c "$simCMD")

    # Compute some metrics
    energyLog=$(echo "$log" | $parseEnergyScript)
    avgDelay=$(echo "$log" | $parseDelayScript)
    totalEnergy=$(echo "$energyLog" | awk 'BEGIN{power=0;FS=","}NR!=1{power+=$2}END{print(power)}')
    sensorsEnergy=$(echo "$energyLog" | awk -F',' 'BEGIN{sumW=0}$1<0{sumW+=$2}END{print sumW}')
    networkEnergy=$(echo "$energyLog" | awk -F',' 'BEGIN{sumN=0}$1>=0{sumN+=$2}END{print sumN}')
    nbPacketCloud=$(echo "$log" | grep -c "CloudSwitch receive")
    nbNodes=$(echo "$log" | awk '/Simulation used/{print($3)}')
    ns3Version=$(echo "$log" | awk '/NS-3 Version/{print($3)}')

    # Save logs
    echo -e "#+TITLE: $(date) ns-3 (version ${ns3Version}) simulation\n" > "$logFile"
    echo "* Environment Variables" >> "$logFile"
    env >> "$logFile"
    echo "* Full Command" >> "$logFile"
    echo "$simCMD" >> "$logFile"
    echo "* Output" >> "$logFile"
    echo "$log" >> "$logFile"
    echo "* Energy CSV (negative nodeId = WIFI, 0 = AP (Wireless+Wired), positive nodeId = ECOFEN)" >> "$logFile"
    echo "$energyLog" >> "$logFile"
    echo "* Metrics" >> "$logFile"
    echo "-METRICSLINE- sensorsSendInterval:${sensorsSendInterval} sensorsPktSize:${sensorsPktSize} sensorsNumber:${sensorsNumber} nbHop:${nbHop} linksBandwidth:${linksBandwidth} linksLatency:${linksLatency} totalEnergy:$totalEnergy nbPacketCloud:$nbPacketCloud nbNodes:$nbNodes avgDelay:${avgDelay} ns3Version:${ns3Version} simKey:${simKey} positionSeed:${positionSeed} sensorsEnergy:${sensorsEnergy} networkEnergy:${networkEnergy}" >> "$logFile"
}

Grid 5000
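The Grid 5000 master script below reuses the experiment blocks unchanged. It defines handleSim, and the guard at the top of run then hands every parameter set to it instead of launching ns-3. Here is a minimal, self-contained sketch of that hook pattern. Its run is a simplified stand-in, not the real function, and the echoed messages are illustrative:

```bash
#!/usr/bin/env bash
# Sketch of the handleSim hook: run delegates to handleSim when such a
# function exists, otherwise it takes its normal path.

run() {
    # Same guard as the real run function
    type -t handleSim > /dev/null && { handleSim; return; }
    echo "would launch the simulator (sensorsNumber=${sensorsNumber})"
}

sensorsNumber=5
run    # no hook defined yet: normal path

# The master node defines handleSim so that each run call only records its
# parameters (it writes an args file; this sketch writes to stdout instead).
handleSim() {
    echo "queued sensorsNumber=${sensorsNumber}"
}
run    # now delegates to handleSim
```

`type -t` is evaluated at call time. Defining handleSim anywhere before the experiment loops is therefore enough to turn every run call into argument generation.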

Master Node Script

This code generates the simulation arguments, distributes them to the worker nodes and starts their simulation processes:

##### Arguments #####
nHost=20 # At least 20 hosts
nProcesses=3 # Max number of parallel simulations per host (don't go too high: around 8, your processes get killed)
nHours=4 # Reservation duration (hours)
simArgsLoc=~/args/ # Don't change this path without changing it in the worker script
finishedFile="$simArgsLoc/finished-microBenchmarks.txt"
logsFinalDst=~/logs/
#####################

# Check
[ "$1" == "subscribe" ] && subscribe=1 || subscribe=0
[ "$1" == "deploy" ] && deploy=1 || deploy=0
[ "$1" == "-p" ] && progress=1 || progress=0

handleSim () {
    [ -z "${argId}" ] && argId=1 || argId=$(( argId + 1 ))
    outF="$simArgsLoc/${argId}.sh" # One args file per simulation (prefixed with a host name during distribution)
    # Add shebang
    echo '#!/bin/bash' > $outF
    echo "finishedFile=\"$finishedFile\"" >> $outF
    echo "nProcesses=$nProcesses" >> $outF
    echo "logsFinalDst=\"$logsFinalDst\"" >> $outF
    # Save arguments
    echo "sensorsSendInterval=${sensorsSendInterval}" >> $outF
    echo "sensorsPktSize=${sensorsPktSize}" >> $outF
    echo "nbHop=${nbHop}" >> $outF
    echo "simKey=\"${simKey}\"" >> $outF
    echo "sensorsNumber=${sensorsNumber}" >> $outF
    echo "linksLatency=${linksLatency}" >> $outF
    echo "linksBandwidth=${linksBandwidth}" >> $outF
    echo "positionSeed=${positionSeed}" >> $outF
}

# Start subscribe/deploy
if [ $subscribe -eq 1 ]
then
    echo "Starting oarsub..."
    oarsub -l host=$nHost,walltime=$nHours 'sleep "10d"' # Start reservation
    echo "Please join your node manually when your reservation is ready by using oarsub -C <job-id>"
    exit 0
elif [ $deploy -eq 1 ]
then
    echo "Starting deployment..."
    ##### Useful Variables #####
    wai=$(dirname "$(readlink -f $0)") # Where Am I ?
    hostList=($(cat $OAR_NODE_FILE | uniq))
    ############################

    # Initialize logsFinalDst
    mkdir -p $logsFinalDst
    rm -rf $logsFinalDst/* # Clean the log destination just in case (dangerous, but avoids conflicts)
    mkdir -p $simArgsLoc
    rm -rf $simArgsLoc/* # Clean old args

    # Add your simulation code blocks here
    <<runNbSensors>>
    <<runSendInterval>>
    <<runSensorsPos>>

    # Distribute the args files over the reserved nodes (round-robin)
    cd $simArgsLoc
    curHostId=0
    for file in $(find ./ -type f)
    do
        [ $curHostId -eq ${#hostList[@]} ] && curHostId=0 # Wrap around the actual host list
        mv -- ${file} ${hostList[$curHostId]}-$(basename ${file})
        curHostId=$(( curHostId + 1 ))
    done
    cd -

    # Run simulations
    echo "Hosts that finished their work:" > $finishedFile
    for host in ${hostList[@]}
    do
        echo "Start simulations on node $host"
        oarsh lguegan@$host bash g5k-worker.sh &
    done
    exit 0
elif [ $progress -eq 1 ]
then
    alreadyFinished=$(cat $finishedFile | tail -n +2 | wc -l)
    percent=$(echo $alreadyFinished $nHost | awk '{print $1/$2*100}')
    echo "Progress: " $alreadyFinished/$nHost "(${percent}%)"
else
    echo "Invalid arguments, make sure you know what you are doing!"
    exit 1
fi

Worker Node Script

Similar to the single-run script, but with additional code to handle the g5k simulation platform (arguments, logs, etc.).
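The loop in the worker script starts each simulation in the background. Once nProcesses are running, it waits for the whole batch before launching the next one. Here is a standalone sketch of that scheme, with a short sleep standing in for a simulation. run_batched and the job names are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch of the worker's batching: launch jobs in the background and, once
# nProcesses are running, wait for the whole batch before starting more.

nProcesses=3

run_batched() {
    local curNProcesses=0 job
    for job in "$@"; do
        # Stand-in for "source $argsFile; run" in the real script
        { sleep 0.1; echo "done $job"; } &
        ((curNProcesses += 1))
        [ "$curNProcesses" -ge "$nProcesses" ] && { curNProcesses=0; wait; }
    done
    wait    # wait for the last, possibly partial, batch
}

run_batched a b c d e    # two batches: a b c, then d e
```

With this design, one slow simulation holds up its whole batch. On bash 4.3 or later, `wait -n` waits only for the next job to finish and could keep nProcesses simulations running continuously. That is an alternative, not what the script does.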

export NS3_PATH=~/.bin/ns-3/ns-3.29/
g5kLogFolder="/tmp/logs/"
mkdir -p $g5kLogFolder # Create the log folder just in case
rm -rf $g5kLogFolder/* # Clean previous logs just in case
hostname=$(hostname)

# Run simulations with the sourced arguments
simArgsLoc=~/args/ # Don't change this path without changing it in the master script
argsId=0
argsFile="$simArgsLoc/${hostname}-args-${argsId}.sh" # Arguments generated by the master node
curNProcesses=0 # Start with no processes
for argsFile in $(find $simArgsLoc -type f -name "${hostname}-*") # Files named <host>-<argId>.sh by the master
do
    <<singleRun>>
    logFolder=$g5kLogFolder # Override the default logFolder set by singleRun
    source $argsFile # Fetch arguments
    run & # Run asynchronously
    ((curNProcesses+=1)) # Increase by 1
    [ $curNProcesses -ge $nProcesses ] && { curNProcesses=0; wait; }
done
wait # Wait until the end of all simulations
cp -r $g5kLogFolder/* "$logsFinalDst" # Copy logs from /tmp to the NFS home
echo $(hostname) >> $finishedFile # Report that this host has finished

Logs Analysis

To generate all the plots, execute the following line:

R Scripts

Generate all plots script

Available variables:

| Name                |
|---------------------|
| sensorsSendInterval |
| sensorsPktSize      |
| sensorsNumber       |
| nbHop               |
| linksBandwidth      |
| linksLatency        |
| totalEnergy         |
| nbPacketCloud       |
| nbNodes             |
| avgDelay            |
| ns3Version          |
| simKey              |
| positionSeed        |
| sensorsEnergy       |
| networkEnergy       |
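These names are the columns that logToCSV writes to logs/data.csv, so you can check the list against your own logs. Here is a small sketch, assuming logToCSV has already produced the CSV. list_columns is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Print the column names (first CSV line) one per line.
# Defaults to the file written by logToCSV.
list_columns() {
    head -n 1 "${1:-logs/data.csv}" | tr ',' '\n'
}

# Usage: list_columns             # reads logs/data.csv
#        list_columns other.csv   # any other CSV with a header line
```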

<<RUtils>>

# easyPlotGroup("linksLatency","totalEnergy", "LATENCY","sensorsNumber")
# easyPlotGroup("linksBandwidth","totalEnergy", "BW","sensorsNumber")
easyPlot("sensorsNumber","totalEnergy", "NBSENSORS")
easyPlotGroup("positionSeed", "totalEnergy","SENSORSPOS","sensorsNumber")
easyPlotGroup("positionSeed", "avgDelay","SENSORSPOS","sensorsNumber")
easyPlotGroup("sensorsSendInterval","sensorsEnergy","SENDINTERVAL","sensorsNumber")
easyPlotGroup("sensorsSendInterval","networkEnergy","SENDINTERVAL","sensorsNumber")

R Utils

RUtils loads the logs (logs/data.csv) and provides simple plotting functions for them.

library("tidyverse")

# Feel free to update the following labels
labels=c(nbNodes="Number of nodes",sensorsNumber="Number of sensors",totalEnergy="Total Energy (J)",
         nbHop="Number of hops (AP to Cloud)", linksBandwidth="Links Bandwidth (Mbps)", avgDelay="Average Application Delay (s)",
         linksLatency="Links Latency (ms)", sensorsSendInterval="Sensors Send Interval (s)", positionSeed="Position Seed",
         sensorsEnergy="Sensors WiFi Energy Consumption (J)", networkEnergy="Network Energy Consumption (J)")

# Load data
data=read_csv("logs/data.csv")

# Get the label for varName (falls back to varName itself)
getLabel=function(varName){
    if(is.na(labels[varName])){
        return(varName)
    }
    return(labels[varName])
}

easyPlot=function(X,Y,KEY){
    curData=data%>%filter(simKey==KEY)
    stopifnot(NROW(curData)>0)
    ggplot(curData,aes_string(x=X,y=Y))+geom_point()+geom_line()+xlab(getLabel(X))+ylab(getLabel(Y))
    ggsave(paste0("plots/",KEY,"-",X,"_",Y,".png"))
}

easyPlotGroup=function(X,Y,KEY,GRP){
    # Convert the grouping column to character so that ggplot uses a discrete color scale
    curData=data%>%filter(simKey==KEY) %>% mutate(!!GRP:=as.character(UQ(rlang::sym(GRP))))
    stopifnot(NROW(curData)>0)
    ggplot(curData,aes_string(x=X,y=Y,color=GRP,group=GRP))+geom_point()+geom_line()+xlab(getLabel(X))+ylab(getLabel(Y)) + labs(color = getLabel(GRP))
    ggsave(paste0("plots/",KEY,"-",X,"_",Y,".png"))
}

Plots -> PDF

Merge all plots in the plots/ folder into a PDF file.

orgFile="plots/plots.org"
<<singleRun>> # To get all default arguments

# Write helper function
function write {
    echo "$1" >> $orgFile
}

echo "#+TITLE: Analysis" > $orgFile
write "#+LATEX_HEADER: \usepackage{fullpage}"
write "#+OPTIONS: toc:nil"

# Default arguments
write '\begin{center}'
write '\begin{tabular}{lr}'
write 'Parameters & Values\\'
write '\hline'
write "sensorsPktSize & ${sensorsPktSize} bytes\\\\"
write "sensorsSendInterval & ${sensorsSendInterval}s\\\\"
write "sensorsNumber & ${sensorsNumber}\\\\"
write "nbHop & ${nbHop}\\\\"
write "linksBandwidth & ${linksBandwidth}Mbps\\\\"
write "linksLatency & ${linksLatency}ms\\\\"
write '\end{tabular}'
write '\newline'
write '\end{center}'

for plot in $(find plots/ -type f -name "*.png")
do
    write "\includegraphics[width=0.5\linewidth]{$(basename ${plot})}"
done

# Export to pdf
emacs $orgFile --batch -f org-latex-export-to-pdf --kill

Log -> CSV

logToCSV extracts useful data from the logs and writes it to logs/data.csv.
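Each log ends with a single -METRICSLINE- line of space-separated key:value pairs. logToCSV takes the keys of one log as the CSV header and the values of every log as rows. Here is a sketch of that transformation for one line. metrics_to_csv and the shortened sample line are made up for illustration:

```bash
#!/usr/bin/env bash
# Sketch of the logToCSV transformation for one metrics line:
# "key:value" fields become either a CSV header (keys) or a CSV row (values).

metrics_to_csv() {
    # $1 = metrics line without the -METRICSLINE- marker
    # $2 = 1 to print the keys (header), 2 to print the values (row)
    echo "$1" | awk -v part="$2" '{
        for (i = 1; i <= NF; i++) {
            split($i, elem, ":")
            printf("%s", elem[part])
            if (i < NF) printf(","); else printf("\n")
        }
    }'
}

# Shortened, made-up example line (real lines carry all metrics)
line="sensorsNumber:10 nbHop:10 totalEnergy:42.5 simKey:NBSENSORS"
metrics_to_csv "$line" 1   # sensorsNumber,nbHop,totalEnergy,simKey
metrics_to_csv "$line" 2   # 10,10,42.5,NBSENSORS
```

Taking the header from a single log assumes that every METRICSLINE lists the same keys in the same order. The run function guarantees this, because it always writes the same echo line.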

csvOutput="logs/data.csv"

# First, save the CSV header line (metric names, taken from one log)
aLog=$(find logs/ -type f -name "*.org" | head -n 1)
metrics=$(cat $aLog | grep -- "-METRICSLINE-" | sed "s/-METRICSLINE-//g")
echo $metrics | awk '{for(i=1;i<=NF;i++){split($i,elem,":");printf("%s",elem[1]);if(i<NF)printf(",");else{print("")}}}' > $csvOutput

# Second, save the metric values of every log
for logFile in $(find logs/ -type f -name "*.org")
do
    metrics=$(cat $logFile | grep -- "-METRICSLINE-" | sed "s/-METRICSLINE-//g")
    echo $metrics | awk '{for(i=1;i<=NF;i++){split($i,elem,":");printf("%s",elem[2]);if(i<NF)printf(",");else{print("")}}}' >> $csvOutput
done

Custom Plots

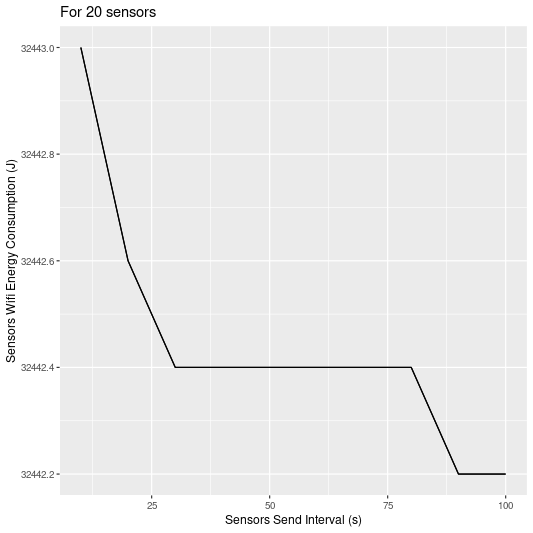

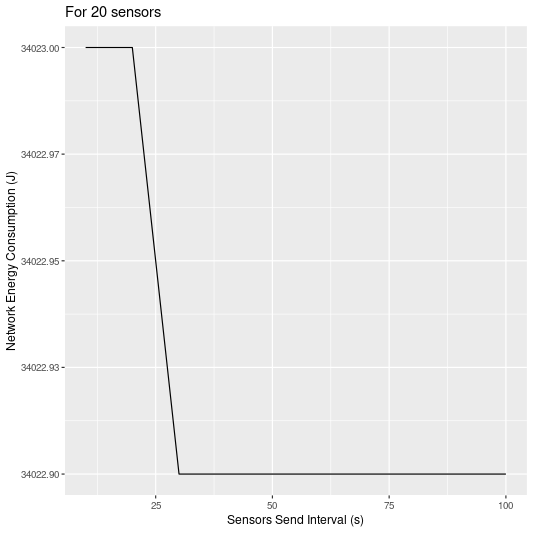

<<RUtils>>

data%>%filter(simKey=="SENDINTERVAL",sensorsNumber==20) %>% ggplot(aes(x=sensorsSendInterval,y=networkEnergy))+xlab(getLabel("sensorsSendInterval"))+ylab(getLabel("networkEnergy"))+
    geom_line()+labs(title="For 20 sensors")

ggsave("plots/sensorsSendInterval-net.png",dpi=80)

<<RUtils>>

data%>%filter(simKey=="SENDINTERVAL",sensorsNumber==20) %>% ggplot(aes(x=sensorsSendInterval,y=sensorsEnergy))+xlab(getLabel("sensorsSendInterval"))+ylab(getLabel("sensorsEnergy"))+
    geom_line()+labs(title="For 20 sensors")

ggsave("plots/sensorsSendInterval-wifi.png",dpi=80)